How to improve code performance in 5 steps?

While looking for inspiration to write this post, I ran into a help request on a popular PHP community forum.

Some guy wrote a command that pulled several thousand users from the database and calculated something for them. Each user had several related objects. It wasn’t difficult to predict that memory would run out.

PHP Fatal error: Allowed memory size of 134217728 bytes exhausted (tried to allocate 123456 bytes).

When he showed some snippets of code, the problem became obvious. Some time ago, I had a similar situation.

Sometimes during the lifecycle of the project, you need to recalculate/migrate/reset some values again. It's easy when you can do it on the database level, but sometimes, it's impossible. What do we usually do in this case? We write a simple PHP script. If we're working with Symfony, this could be a command or controller triggered manually somewhere in the admin panel.

What problem could we have with such a script? If you don't have any bad experience related to OOM (out of memory), you probably won't pay attention to something like a memory while developing web application :). What's more, if you have "memorylimit = 8192M" set locally (or in the dev docker container), then on production (you're lucky if it's a preprod) there is a surprise “memorylimit = 512M”.

Background

So let's think of an example. We have a blog where anyone can create an account and add a comment to the post. We hope our users are trustworthy, they read the rules, and won’t use bad words. But it isn't the case, and not everyone is following the rules. We decide to censor comments.

Exemplar solution to a problem (I’m working on clean Symfony 5 with the default configuration, and Doctrine ORM 2.7):

protected function execute(InputInterface $input, OutputInterface $output)

{

$comments = $this->commentRepository->findAll();

foreach ($comments as $comment) {

$this->censor($comment);

$this->entityManager->flush();

}

}

private function censor(Comment $comment) : void {

// censor here

}It’s a Symfony command that pulls out all comments and sequentially censors them if necessary. Looks good? The programmer wrote it, deployed to the preprod server, executed, and then you got “out of memory” notification. "Strange, it worked for me" - he replied .

Of course, if you have a few comments, it will work and you won’t notice anything wrong. But if you have 200k comments, then you have a problem.

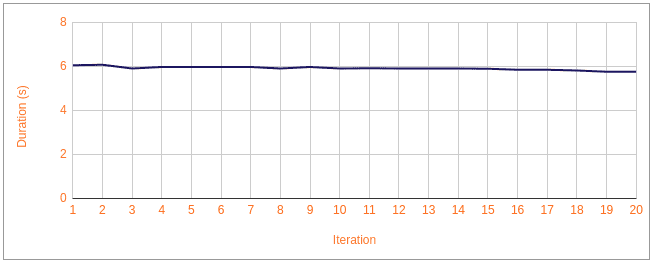

In Symfony, for example we can use Stopwatch1 component to measure code execution time and memory used. I measured command and printed the results in the console:

Initial: Memory usage - 14.00 MB, Duration - 0.00 s

findAll(): Memory usage - 676.50 MB, Duration - 13.23 s

Comment 1: Memory usage - 676.50 MB, Duration - 6.06 s

Comment 2: Memory usage - 676.50 MB, Duration - 6.09 s

Comment 3: Memory usage - 676.50 MB, Duration - 5.91 s

Comment 4: Memory usage - 676.50 MB, Duration - 5.98 s

...

Comment 19: Memory usage - 676.50 MB, Duration - 5.77 s

Comment 20: Memory usage - 676.50 MB, Duration - 5.77 sscript duration (s):

script memory usage (MB):

script memory usage (MB):

In the example mentioned, fetching all comments from the database took 13s and consumed 676MB of memory. Censoring every single comment would have taken 6 seconds and almost two weeks to finish! But what if 676MB is more than we have available? We need to do something about it.

Paginator

The first thing to improve is to get rid of fetching all comments at once. All 200k comments, unnecessarily, are waiting in line, blocking the memory. From version 2.2 Doctrine provides a tool like a query paginator 2.

So I prepared the method to get the paginator. It creates a query builder with limit and offset, pushing it into the Paginator object:

private function getPaginator($limit = 200, $offset = 0): Paginator

{

$qb = $this->commentRepository->createQueryBuilder('c');

$qb

->setMaxResults($limit)

->setFirstResult($offset);

return new Paginator($qb);

}And use it in command:

protected function execute(InputInterface $input, OutputInterface $output)

{

$offset = 0;

$limit = 200;

$comments = $this->getPaginator($limit, $offset);

$counter = $comments->count();

while ($offset < $counter) {

foreach ($comments as $comment) {

$this->censor($comment);

$this->entityManager->flush();

}

$offset += $limit;

$comments = $this->getPaginator($limit, $offset);

}

}What do we have here? A defined initial offset and limit. The limit should be selected individually, depending on the needs. In this case, I set 200 at the beginning. After that, I created a paginator object (example above). The paginator has a count() method that returns the number of all results. After that, in the loop, we pull out 200 results and work with them.

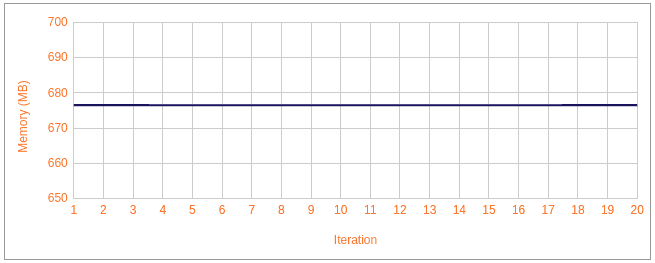

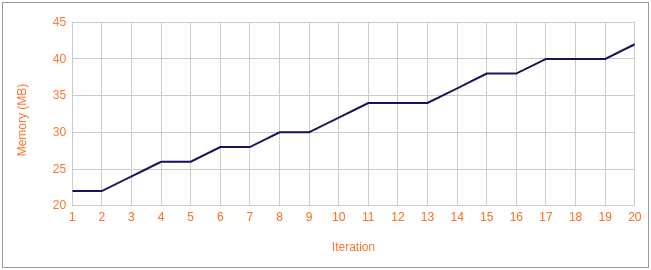

Let's check what such changes gave us. I added the measurement of each loop, here are the results for the first 20 iterations (4k comments from 200k):

Initial: Memory usage - 16.00 MB, Duration - 0.00 s

Before loop: Memory usage - 20.00 MB, Duration - 0.10 s

----------- ------------------- --------------

Iteration Memory usage (MB) Duration (s)

----------- ------------------- --------------

1 18 1.80

2 18 3.06

3 20 4.10

4 20 5.20

5 20 6.55

(...)

9 24 11.02

10 24 12.22

11 26 13.28

12 26 15.51

(...)

15 26 18.21

16 28 19.70

...

19 28 22.43

20 30 23.64

----------- ------------------- --------------script duration (s):

script memory usage (MB):

script memory usage (MB):

It is better with a paginator because everything works much faster and uses significantly less memory. But we have two problems. First, the time increases with each iteration, and secondly, there is a memory leak (more of it is taken with each iteration). You can notice that every 200 comments need an extra 0,6 MB of memory. Assuming this growth rate, 620MB of memory would be enough. But the time with each iteration increases by 1.16s, so it would take almost 7 days to handle all. We don't have that much time, we need to figure out something better.

Flush wisely

As you can see in the example with the Paginator, the Doctrine method flush() is used after every single comment change.

What does flush() do?

In Symfony, object update, insertion (persist) or deletion (remove) is not immediately synchronized with the database. To synchronize it, we need to call the flush() method from the EntityManger class. It will call commit() from UnitOfWork3. This method is (from the doc): "executing all operations that have been postponed up to this point. The state of all managed entities will be synchronized with the database.". Here [link] you can see how it looks (I hope you don't see it for the first time!).

In our example, calling flush() after each comment change, will cause a separate transaction with the update query in the database. Let's try to change it to one flush for one iteration:

while ($offset < $counter) {

foreach ($comments as $key => $comment) {

$this->censor($comment);

}

$this->entityManager->flush();

…

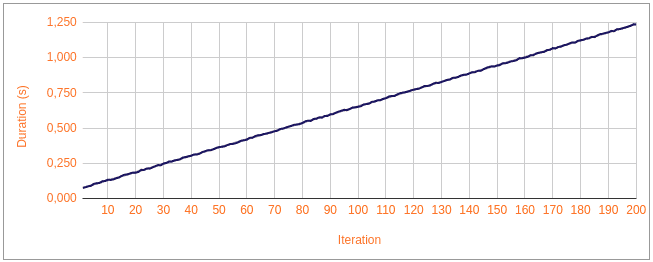

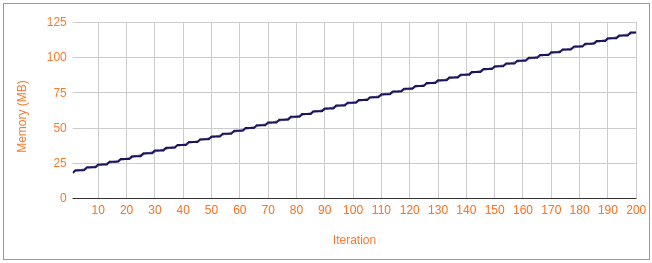

}Now the results:

Initial: Memory usage - 14.00 MB, Duration - 0.00 s

Before loop: Memory usage - 16.00 MB, Duration - 0.09 s

Iteration Memory usage (MB) Duration (s)

----------- ------------------- --------------

1 18 0.07

2 18 0.064

3 20 0.07

4 20 0.092

5 20 0.084

6 22 0.089

(...)

95 66 0.631

96 66 0.619

97 66 0.628

98 66 0.631

99 68 0.644

100 68 0.672

(...)

194 112 1.2

195 112 1.208

196 112 1.239

197 114 1.215

198 114 1.228

199 114 1.241

200 114 1.233

----------- ------------------- --------------script duration (s):

script memory usage (MB):

script memory usage (MB):

If we look at the time - we can see improvement. At this rate, it would take about 50 minutes for all comments. However, time is still getting longer with each iteration, which is not good. There is also a slight improvement in memory consumption, but the memory leak is still too big, and we would need about 500 MB to operate. In the end, it is taking too much time, and too much memory is needed.

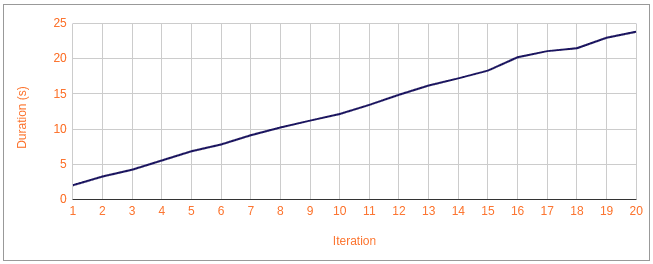

Clean up after yourself.

After calling flush(), UnitOfWork does not clear itself by default. It still keeps changes made and objects managed.

Profiling my command, I noticed that flush() is taking more and more time with each iteration. This is the source of the problem. EntityManager has a clear()4 method that clears all synchronized and unsynced changes that were previously kept in UnitOfWork.

Let's clean UnitOfWork after each iteration.

while ($offset < $counter) {

foreach ($comments as $key => $comment) {

$this->censor($comment);

}

$this->entityManager->flush();

$this->entityManager->clear();

…

}Now the results for all 200k comments:

Initial: Memory usage - 14.00 MB, Duration - 0.00 s

Before loop: Memory usage - 16.00 MB, Duration - 0.09 s

------------------- --------------

Memory usage (MB) Duration (s)

------------------- --------------

34 75.51

------------------- --------------Finally, time stops growing every iteration! At this rate, the whole command took "only" 75 seconds. When it comes to memory, we've reduced total consumption to 34MB.

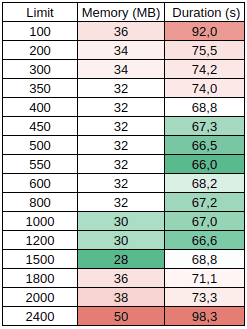

Adjust

Can we improve anything else? For example, we can adjust the paginator. I chose the limit quite randomly at the beginning. I was tempted to check how time and memory are depending on the selected paginator limit.

Based on these results, I can conclude that the best results for duration are for the limit between 400 and 1500. Outside this range, the duration is increasing rapidly. Memory results are not significantly different from 100 to the 2000 limit. Above that, memory begins to be used faster. However, the best results for memory were for the limit between 1000 and 1500. So the best option in my example will probably be to choose a limit between 1000 and 1200 (comments in single iteration).

We started with 676MB of memory usage and around two weeks needed to complete the command. We've managed to improve this result up to 30MB and 67 seconds!

Accesto is a boutique software house focused on software craftsmanship and partnership. Founded in 2010 we have helped over 50 projects to overcome their technical challenges and outpace competition.

LEARN MOREHow to speed up the code in 5 easy steps?

If you are writing a command that has to a large amount of data, consider the following steps, that will ensure good performance and optimized memory usage:

1. Plan your code

Make sure your code is well planned. Some things you can do before others, to avoid repeating the same actions. Remember that the connection to the database takes a while, and it is not worth querying the database for each item separately, you can sometimes group queries.

2. Paginte

Don’t do everything at once, if you have a lot of data to process, split them into packages and operate on smaller pieces, it will save you some memory.

3. Flush wisely

Doctrine flush() synchronizes the objects you have changed with their state in the database. Remember that you do not have to do it every time you change something, because it is time-consuming. But do not think that it is best to do it at once in the end, then it will also take longer, because of the need to synchronize a big amount of data.

4. Clear when you can

Doctrine keeps data in UnitOfWork even after flush(). With each portion of processed data, the size of the data storage increases, it is worth getting rid of them with clear(), it will allow you to free up some memory space. But be careful, think it through carefully, because you may lose the data you will need in the future.

5. Adjust your script

You must select the size of the data portion that you will process in one iteration. In this way, you will improve the script duration and reduce memory usage.

What else?

Maybe one of those questions will help you to solve your performance problem:

- are you running the command in prod mode :)?

- have you installed dependencies with autoloader optimization?

- maybe in some cases it is better to execute a clean query to the database instead of putting the whole ORM to work?

- someone could do a code review for you? Maybe he would catch something you can't see.

Make sure also to check our blog post on Top 5 hacks to fix slow web applications.

Need further help?

In Accesto we focus on solving deep issues within existing web applications. Feel free to contact us and we will help you optimize performance of your web product.

-

https://symfony.com/doc/5.0/components/stopwatch.html

↩ -

https://www.doctrine-project.org/projects/doctrine-orm/en/2.7/tutorials/pagination.html

↩ -

https://www.doctrine-project.org/projects/doctrine-orm/en/2.7/reference/working-with-objects.html

↩ -

https://github.com/doctrine/orm/blob/2.7/lib/Doctrine/ORM/UnitOfWork.php#L2497

↩