My homepage is slowing down

Recently, I was asked to help one team struggling with site performance getting very low. The site response times increased extremely from average 3 seconds to 8-10 seconds per each request for the homepage. Client has started complaining about the page slowing down. Everyone knows what it means. In most cases, users abandon using such slow services. I had to do something really quickly to improve site speed.

All pages were quite slow but the first thing to do was to optimize the homepage as most frequently visited. It was an ecommerce project. Homepage contains 3 blocks of products (recent, best sellers and promoted). Not much, don’t you think? Continue reading.

Blackfire in action

Before I started inspecting the code I have used blackfire - a popular tool for profiling web applications. Blackfire installation is pretty easy. Here is a simple guide on how to do it: blackfire installation.

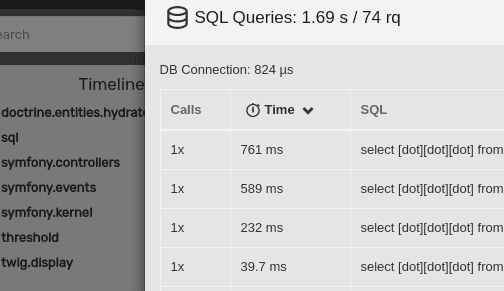

Free version is limited in functionality and you can't use it on the server. I used the profiler version and the report was very beneficial. The load time was 7.61 second. My first thought was that sql queries were so much wrong but hey, look at this:

Limit the results

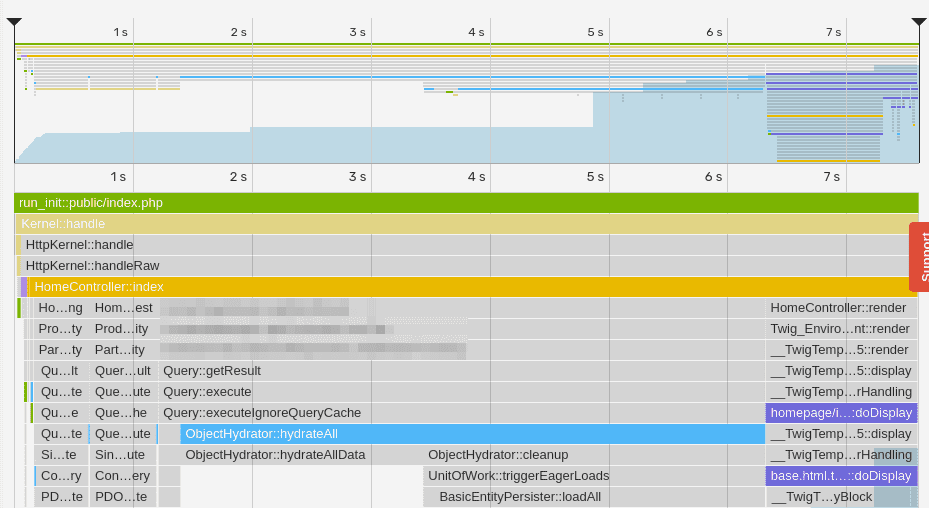

Obviously three queries were executing too long but not too long enough to make 7.61s. It was 1.69s and if you do the math, you will still have 5.92 seconds lost somewhere. Let’s look at the timeline:

Object hydrator was taking most of the time. I noticed that it was getBestOffers method that was taking too long. Finally I have checked the code and I have found:

$result = $queryBuilder

->getQuery()

->getResult();

return \array_slice($result, 0, $limit);OK, so I have changed that to:

return $queryBuilder

->getQuery()

->setMaxResults($limit)

->getResult();With this small change I reduced time from 7.61 to 2.69s. No hurray yet but I just have improved the site speed over 64% in 5 minutes of developing time.

Enable doctrine cache

I had still at least 2 seconds to cut off. As you remember three queries were taking 1.69 sec. Those queries were very complex. There were many joins and subselects. The logic was really complex. The project does not have any acceptance tests and no clear documentation how things should work. So I needed to do something quick. I decided to use a doctrine cache.

With the product owner and team we decided that five minutes cache will be the best value and there will not be any harm for UX. You should always inform the product owner about such changes. They really need to know what are disadvantages of using cache. So I added:

return $queryBuilder

->getQuery()

->useResultCache(true, Cache::SHORT_TTL_CACHE)

->setMaxResults($limit)

->getResult();I refreshed the page and I realized that it did not help at all. Sql queries were still being executed. My first thought was that the cache is not working properly. I have checked the queries and found that there is a constantly changing parameter in the query. There were some very complex entity relations and to simplify it: Product has an expiration date column. The column type was set to date (e.g. 2020-02-10). In query param I have found that:

->andWhere('p.expirationDate >= :today')

->setParameter('today', new \DateTime())I have changed it to:

->andWhere('p.expirationDate >= :today')

->setParameter('today', (new \DateTime())->format('Y-m-d'))Guess what? It worked. Here is what I have achieved = 1.23seconds. I cut 6.38 seconds within a couple minutes of work and I added around 10 lines of code and removed one line.

Static resource vs database query

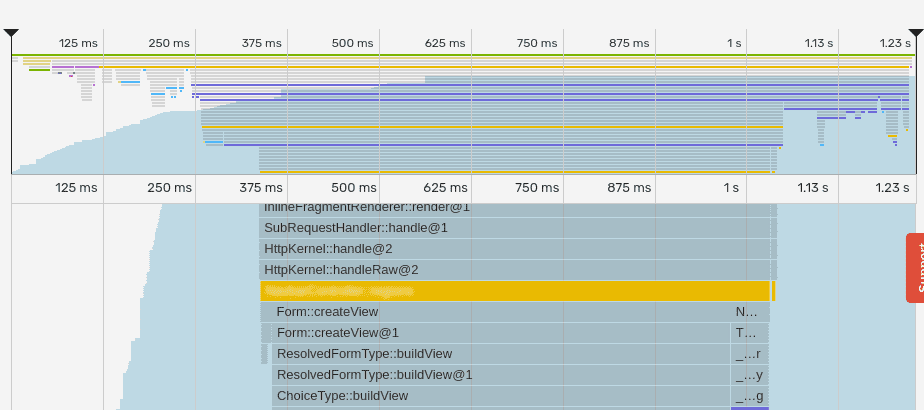

Still, the response time was too long. I check blackfire again and I found that:

The part was responsible for generating a form to select an active city. Query was really fast but the object hydrator was taking time to build objects. I have noticed that select input is using select2 javascript library. Here is a link if you are not familiar with it https://select2.org/.

All those cities names (around 1k results) were loaded on each request from the database using also memory to build entities objects. How often do cities change? Not often.

Doctrine cache would be fine here but I decided to go further. I dumped those cities into a JSON file. I had already select2 implementation here. When a new city is founded, the JSON file will be regenerated. Meanwhile users get a static json resource with a list of cities.

Finally, I have managed to reduce from 2.69 to below 0.6 and that is over 77% response time decrease. Dump cities to json file was triggered by any city change. The implementation was not as easy as previous changes but took me around 4 hours with testing.

Quick win

Changing cities to static json was the most time-consuming change. In summary those changes took me a couple of hours. Finally I managed to decrease response time from 7610ms to 557ms that is almost 93% of site speed in 4 hours of developing time. What is more, I did it and I did not know much about business logic of application. I did not change the way the results were taken from the database. I just used some simple tricks to quickly speed the page up.

For more tricks like this see the blog post of our CTO on Top 5 hacks to fix slow web applications. Or if you need assistance, just contact us - in Accesto we specialize in improving existing php applications and will be glad to help you.