Strangler Pattern in practice

This week I finally hit the delete button. I had been waiting for this moment for the last three years. Finally, I erased the legacy part of the codebase I was working on! Using the strangler pattern, we got to the point where the legacy SaaS application was completely replaced by a shiny new web application that everyone wants to work on and develop. But let’s start from the beginning.

Who likes working with legacy code? You may not believe it, but I do. It is always a challenge. Refactoring a web application is usually archeology combined with graphology and patience training. Moreover, it is a job for the brave. Let's be honest: how many legacy projects have tests? And if they do, the tests are usually red, and had been red long before we took over the project. If you change something in one place in legacy code, you never know whether it will break something in another place that you don't know about, just because you haven't dug into it yet. Fixing a bug in legacy code is like playing with dominoes. You fix one thing, and it leads you to another bug, and another, and another. This way, one simple bug fix turns into 60 changed files. And when you finally deploy the changes, you sweat while waiting for something to explode.

But don't worry, that is not how we work with legacy code. At Accesto, we specialize in difficult cases. We usually take over the projects that others were afraid of. We like legacy, and we usually live in peace with legacy code. But how is that even possible? How do you deal with legacy without going nuts? Fortunately, there are a few ways to do it and stay sane. For example, legacy can be tamed, as Krzysiek showed in his recent article. I'll show you another way.

So let's start from the beginning.

We took over a several-year-old SaaS product that had already achieved business success in its market. But this success came with a hidden cost: technical debt (the costs of technical debt are described in detail by our CEO Piotr). It was a classic case: introducing new features took more and more time and effort, users waited a long time for bug fixes, and the entire website got slower day by day. The technical debt was so high that the previous developers quit their jobs.

The client, after a long search for someone who would like to help him, came to us.

Let's do a quick audit of what we got:

- files with 2k+ lines of code? ✓

- copy-paste driven development? ✓

- hardcoded secret keys in code? ✓

- PHP/HTML/CSS/SQL/JS in single file? ✓

- code versioning with file names? ✓

- vulnerability to SQL injection, XSS, IDOR, etc.? ✓

- FTP deployment? ✓

- spaghetti code? (everywhere) ✓

- and many more. To sum up, it was not good: a big ball of mud. ✓

- SOLID principles? Forget it, that would require using at least some OOP ;-)

How did we approach it? To be honest, we didn't have much experience with the strangler pattern at that time. We had many successful SaaS refactoring projects behind us, but none using that approach. So where did we start?

Versioning

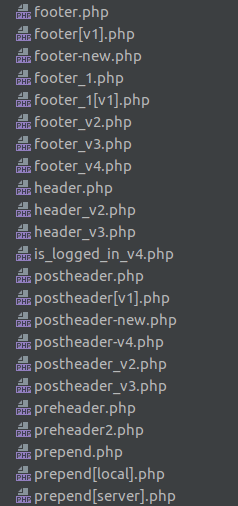

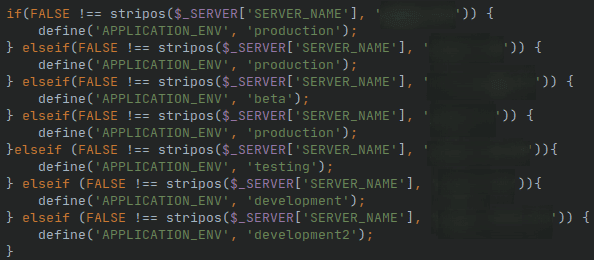

This legacy SaaS had a very peculiar way of versioning the code.

To make it funnier, the "latest version" was not always used everywhere. For example, the login page used "footer_v4.php" and the password reset page used "footer_v2.php", etc. So we couldn't just delete "older versions" that we thought were unused because there were "newer versions".

Copy-paste driven development + useful comments:

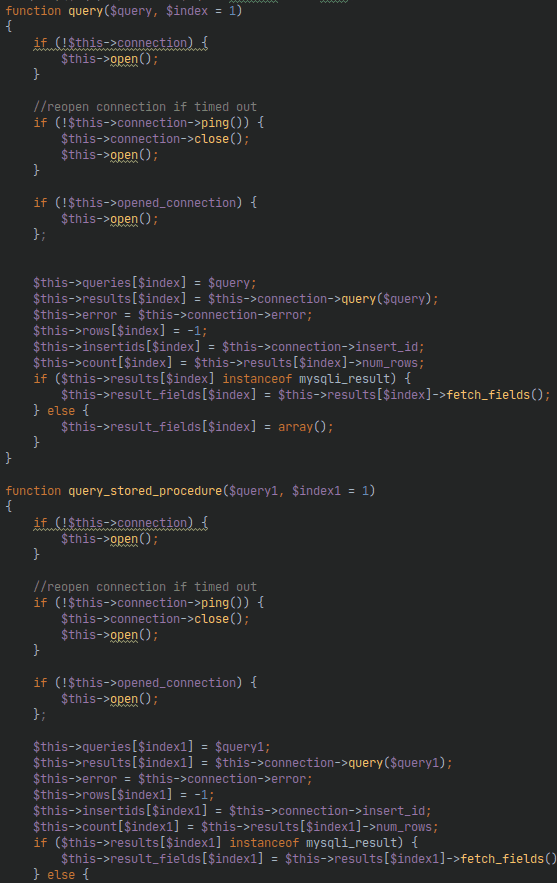

Anyone can make a bit of copy-paste. But "a bit" is crucial here. What we saw there deserved a master's degree in copy-pasting.

You won't find a significant difference between the two methods above. The entire system was built on the well-known methodology of "copy-pasting". How can such things be cleaned? I described an example in the article about the "boy scout rule". Unfortunately, we couldn't just put on rubber gloves and start cleaning, because the strangler pattern is not about refactoring legacy code. Instead, we tried to close the legacy in a box and slowly replace the old functions one by one until we could finally delete the "/legacy" directory. We tried not to touch the legacy code for as long as we could. That was not always possible: sometimes (actually very often at the beginning) an "urgent error" came up. Then, as in the old days, we had to find and fix the bug, which is not easy in two-thousand-liners.

If you think leaving French comments in the code is okay, go to hell.

What options do we have?

- keep going with legacy SaaS - add new features, fix reported bugs just like the developers who have just quit...

- work on SaaS refactoring - slowly improve code quality, improving legacy PHP...

- rewrite from scratch - favorite of all programmers but not business!

Some other ideas?

All of the above options are hopeless. We wanted to try something different, something that would satisfy everyone: programmers, users, and the business. That is why we decided to use the strangler pattern.

What is the strangler pattern? In a nutshell, it’s incrementally replacing individual functionalities of the legacy system with new ones. Over time, nothing will be left of the legacy and the new system will replace all its functions.

Sounds trivial, doesn't it? So where to start?

Disclaimer: this is not a tutorial, it is a story of what we did some time ago. Could it be done better? For sure, and today we would do some things differently. But we didn't go far wrong either.

Repository

We only got FTP access, so we needed to civilize it. We added code versioning (Git). I was the unfortunate one who became the "author" of the entire legacy. I don't need to explain this; each of you knows that it is impossible to work without versioning. Simple: a new repository, git add . without hesitation, git commit -m "clean legacy project", and git push. That's it.

Environment

To be able to work, we had to prepare a development environment. We put all the legacy code in a separate directory. Guess how it was named… yes, you’re right: "/legacy". After that, we deleted files that should not be in the repository, like "/upload/*", anything resembling a cache, etc. Later we found a lot of useless files, but at this stage, we were not sure what was needed and what was not. Finally, we could create a simple Docker setup to run the project. Two containers at the start: one for the database and one for the legacy SaaS.

Facade

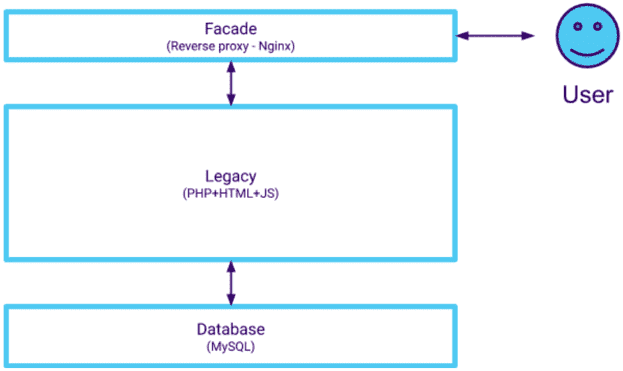

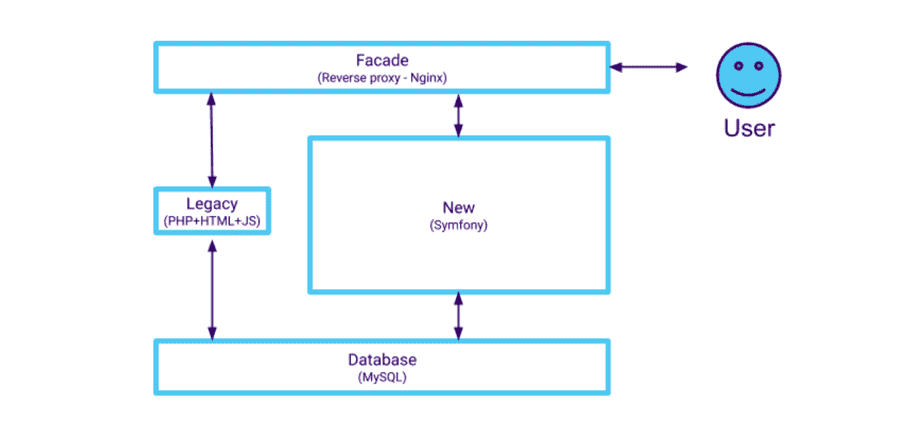

Next came the Gateway/Facade that the strangler pattern calls for. We added an nginx reverse proxy (another option would be the Traefik reverse proxy). In the beginning, all traffic simply went to the legacy container, as the architecture diagram shows.

Thus, we have taken the first step of the strangler pattern in practice.

New

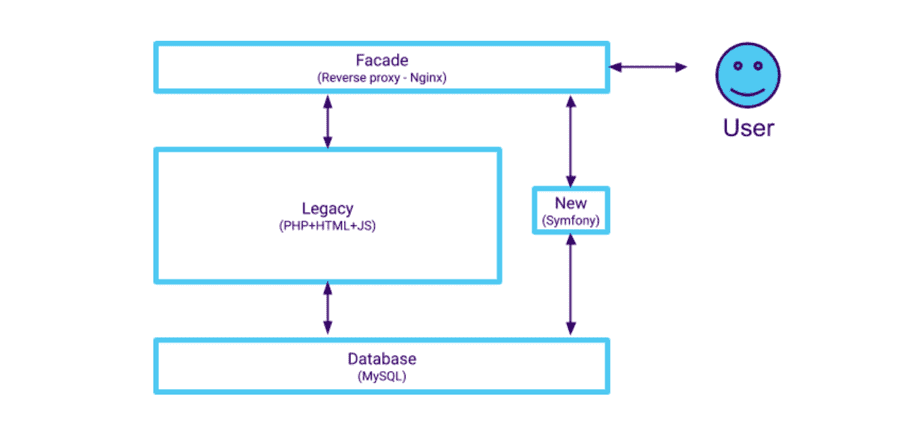

Time for something enjoyable. We had to prepare a place for new features, so we created a new project next to the legacy. A new, clean project, the greenfield that everyone likes. We could choose whatever technologies we wanted. Awesome. We chose what we are good at: the Symfony framework.

We started the easiest way, with a monorepo: next to the legacy, we created the "/new" directory with Symfony and dockerized it. The diagram shows the structure of our project at this point.

In the schema above, the size of the "new" and "legacy" boxes matters. All traffic was still routed across the gateway to the legacy. But we had a new container with clean Symfony that was connected to the same database as the legacy.

Let's stop here for a moment.

Database

We decided at that point to use the same database. Using the legacy database in the new part is actually an anti-pattern. We were aware of it, and despite that, we chose this solution. Why is it wrong? The boundary where "New" and "Legacy" meet must be clear and transparent. When we use one database, we lose that boundary. You don't see what is used by which part. It is not clear what can be changed or removed, or what dependencies you have. The existing structure imposes a data model on you, so you cannot create optimal solutions; you only use what you have. You improve the code by rewriting functionalities, but you are sometimes blocked by the existing data structure. Finally, you end up with a database where some of the tables are new (they are yours and you are proud of them) but have relations to the old ugly ones. Besides, there are many tables in the database whose purpose you don't even know. Worse, you don't know if you can delete them, because maybe something in legacy is using them, or maybe something in "new" is using them somewhere?

So, how should it be done in a perfect world?

Boundaries should be clearly delineated so it is best if the database is separate.

How can this be achieved? Synchronization.

- Cyclic synchronization - it can be a script that synchronizes the databases cyclically, for example once every day.

- Events - Legacy can dispatch events based on which New will update its copy of the data. But that requires legacy changes, and that can be tough.

- DB Triggers - we can synchronize at the database level, for example with database triggers.

- Transaction log - each transaction in the database leaves a log. We can use these logs and recreate them in a second database. For example, we can use Binlog + Kafka for this.
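The first option, cyclic synchronization, can be sketched in a few lines. This is an illustration only, written in Python with SQLite so that it runs anywhere; it is not what we did (we went with a single database). The `users` table, its columns, and the `sync_users` function are hypothetical names.

```python
import sqlite3


def sync_users(legacy: sqlite3.Connection, new: sqlite3.Connection, since: str) -> int:
    """Copy rows changed in legacy after `since` into the new database's own copy."""
    rows = legacy.execute(
        "SELECT id, email, updated_at FROM users WHERE updated_at > ?", (since,)
    ).fetchall()
    # INSERT OR REPLACE overwrites the copy of a row that was synchronized before.
    new.executemany(
        "INSERT OR REPLACE INTO users (id, email, updated_at) VALUES (?, ?, ?)", rows
    )
    new.commit()
    return len(rows)


# Two separate in-memory databases stand in for "legacy" and "new".
legacy = sqlite3.connect(":memory:")
new = sqlite3.connect(":memory:")
for db in (legacy, new):
    db.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, email TEXT, updated_at TEXT)")

legacy.executemany(
    "INSERT INTO users VALUES (?, ?, ?)",
    [
        (1, "a@example.test", "2020-01-01 10:00:00"),
        (2, "b@example.test", "2020-01-02 10:00:00"),
    ],
)

# A scheduler (cron, for example) would call this once per cycle.
copied = sync_users(legacy, new, since="2020-01-01 12:00:00")
```

The sketch relies on an `updated_at` column that legacy tables often lack, which is one reason the other options on the list exist.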

We summed up the pros and cons and decided to go with a single database. We had no experience with this pattern then, so we didn't yet know why it was wrong. As a proof of concept, we wanted to have a working project quickly so that we could test it in practice, and connecting directly to the legacy database is quick and convenient. This caused problems several times. Relationships and a suboptimal model structure were the most problematic. We survived, the project survived too, and we finally managed to improve it all, but today we'd think twice or even three times before using one database.

Initial dump

Speaking of the database: the legacy, being legacy, did not use a full ORM (just something that pretended to be one), did not use migrations, and had no documentation.

All we had was production access to the database.

To be able to work in a development environment, we had to prepare a safe database dump. It was a huge database with over a hundred tables, and we needed a dump as a starting point for local development. First and foremost came anonymization: we had to go through all the tables and locate sensitive data that would cause trouble if it leaked. The next thing was data thinning. Out of several gigabytes of data, we had to leave a few dozen megabytes at most (because the dump would end up in the repository). Database relations were the problem, of course. This was legacy, so the necessary foreign keys were obviously not always there, and we had to be careful. Once the DB dump was prepared, we added it to the repository and prepared a script (in this case, a Phing one) to load it into the database on project setup.
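To make the three steps concrete (anonymize, thin, keep relations consistent), here is a minimal sketch in Python. It works on plain lists of rows rather than a real database, and every table, column, and function name in it is hypothetical; our real dump was prepared against MySQL and loaded with Phing.

```python
from typing import Dict, List

Row = Dict[str, object]


def anonymize_user(row: Row) -> Row:
    """Replace personal data with values derived only from the row id."""
    return {**row, "email": f"user{row['id']}@example.test", "name": f"User {row['id']}"}


def thin(rows: List[Row], keep_every: int) -> List[Row]:
    """Keep every n-th row to shrink the dump."""
    return rows[::keep_every]


def drop_orphans(children: List[Row], parents: List[Row], foreign_key: str) -> List[Row]:
    """Without real foreign keys, relations must be kept consistent by hand."""
    parent_ids = {parent["id"] for parent in parents}
    return [child for child in children if child[foreign_key] in parent_ids]


# Made-up production data: 10 users with one order each.
users = [{"id": i, "email": f"real{i}@customer.test", "name": f"Real Name {i}"} for i in range(1, 11)]
orders = [{"id": 100 + i, "user_id": i} for i in range(1, 11)]

dump_users = [anonymize_user(user) for user in thin(users, keep_every=5)]
dump_orders = drop_orphans(orders, dump_users, "user_id")
```

The order matters: thinning the parent table first and then dropping orphaned child rows is what keeps the small dump loadable.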

Migrations

To manage database changes in a more predictable way, we decided to use Doctrine Migrations. But to be able to use migrations, we needed to have tables mapped to objects (ORM). I don't know if anyone would be able to manually map hundreds of tables to objects without bugs. Luckily we didn't have to: Doctrine has a script ready for this (reverse engineering; yes, we started with Symfony 2.8, it was some time ago).

If you think that I then ran the command that generates a migration and it came out empty (it should have, because we didn't want to change anything at this point, only map tables to objects), you are wrong. This script can't handle everything. It cannot cope with some data types (e.g. tinyint is mapped to boolean regardless of length), some default values (e.g. "0000-00-00 00:00:00" in datetime), and sometimes relations (e.g. it stubbornly tries to rename foreign keys). So initially such a migration, although it should have been empty, contained several dozen changes that we did not make, and we had to review them and decide what to do with each. Some of them we had to get used to in the initial phase: anyone who generated a migration instead of writing it by hand had to start by removing the changes they did not make. And if someone forgot, they were reminded in code review.

From then on, every change in the database was implemented using Doctrine Migrations.

Tests

We decided to protect ourselves and write tests for basic business paths. It was not that simple: the project had no documentation, and there were so many unused files that no one was able to tell what functionality the system had at all. I dare to assume that most of the files in the "/legacy" directory weren't used at all. Even our client did not know about many of the existing functionalities in his system.

At the beginning, there was practically no time for testing. Tests are not what the client came for; he expected bug fixes and new functionalities, not days spent writing tests. The tests were just for us, for our own peace of mind.

So what to test? We decided to test what the client recognized as key functionalities. But for the client, most of the functionality is crucial, so we had to use our intuition. Of course, no one can write tests that cover everything in an unknown existing system with no budget for it. We used PHPUnit. We also added smoke tests (does it return "200"?) for most of the subpages that we managed to find by clicking around.
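The idea of a smoke test fits in a few lines. The sketch below is in Python rather than PHPUnit, purely as an illustration; the paths and the function names `smoke_test` and `http_status` are made up. The status lookup is passed in as a function, so the check can be tried without a running server.

```python
import urllib.error
import urllib.request
from typing import Callable, Dict, Iterable


def smoke_test(paths: Iterable[str], fetch_status: Callable[[str], int]) -> Dict[str, int]:
    """Return every path that did not answer 200, together with its status."""
    failures = {}
    for path in paths:
        status = fetch_status(path)
        if status != 200:
            failures[path] = status
    return failures


def http_status(base_url: str) -> Callable[[str], int]:
    """Build a fetcher that asks a real server; HTTP errors become status codes."""
    def fetch(path: str) -> int:
        try:
            with urllib.request.urlopen(base_url + path, timeout=10) as response:
                return response.status
        except urllib.error.HTTPError as error:
            return error.code
    return fetch


# Canned statuses stand in for the application here.
canned = {"/login.php": 200, "/dashboard.php": 200, "/reports.php": 500}
failures = smoke_test(canned, canned.__getitem__)
```

Against a local environment, the same check would be `smoke_test(paths, http_status("http://localhost:8080"))`, where the URL is again only an assumption.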

Secrets

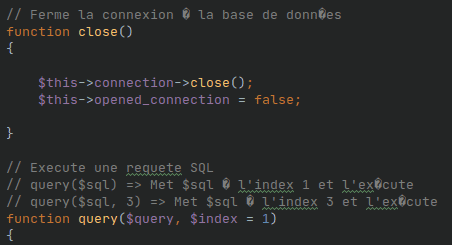

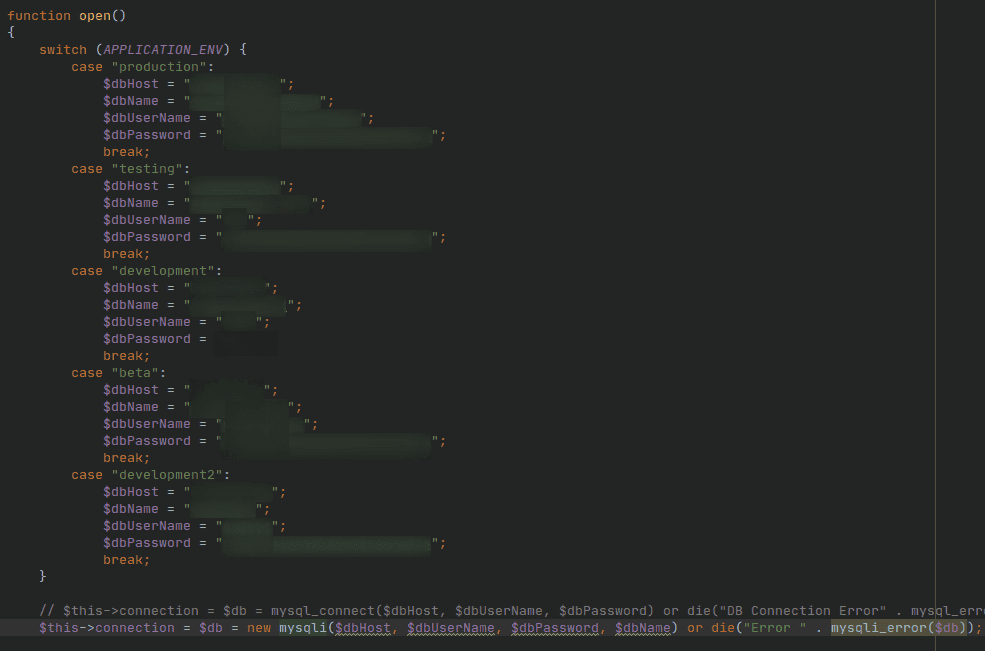

What's next? Something most people don't like to do: some urgent cleanup of the legacy. I will show an example, a database connection configuration:

As you can see, many "secrets" have been hardcoded. And the way it was done, it cried out to heaven for vengeance.

We decided to move this configuration to environment variables. There were many secrets in the system, often hidden in files with several thousand lines and defined wherever they were needed. They all had to be found and replaced with environment variables. It's not a pleasant job, but it has to be done.
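In code, the target state is roughly the following. It is a sketch in Python, not our PHP configuration, and the variable names (`DB_HOST`, `DB_NAME`, `DB_USER`, `DB_PASSWORD`, `DB_PORT`) are assumptions chosen for the example.

```python
import os
from typing import Mapping

# Hypothetical variable names; nothing secret lives in the code any more.
REQUIRED = ("DB_HOST", "DB_NAME", "DB_USER", "DB_PASSWORD")


def database_config(env: Mapping[str, str] = os.environ) -> dict:
    """Read the connection settings from the environment and fail loudly if any is missing."""
    missing = [name for name in REQUIRED if not env.get(name)]
    if missing:
        raise RuntimeError("Missing environment variables: " + ", ".join(missing))
    return {
        "host": env["DB_HOST"],
        "dbname": env["DB_NAME"],
        "user": env["DB_USER"],
        "password": env["DB_PASSWORD"],
        "port": int(env.get("DB_PORT", "3306")),
    }


# In the containers the values come from the real environment;
# a plain dict is enough to try the function out.
example_env = {"DB_HOST": "db", "DB_NAME": "app", "DB_USER": "app", "DB_PASSWORD": "s3cret"}
config = database_config(example_env)
```

Failing at startup when a variable is missing is a deliberate choice: a misconfigured container is noticed immediately instead of on the first query.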

Security issues

The next step was to fix critical security issues. The legacy code was in poor shape from that point of view. Inserting URL parameters straight into SQL queries? Of course. I think I could write an article "Top 20 OWASP Vulnerabilities" and illustrate each subsection with examples we found in the code. Yes, it was that bad. We spent a lot of time finding and patching security issues.

When we told the client about the scale of the problem, we managed to persuade him to commission penetration tests from an external company that specializes in them. The several-dozen-page report we received was very helpful.
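For readers who have not seen it, this is what "URL parameters straight into SQL queries" means and how a parameterized query closes the hole. The sketch uses Python with SQLite so it can be run as is; the `invoices` table and both functions are invented for the illustration and are not taken from the project.

```python
import sqlite3

db = sqlite3.connect(":memory:")
db.execute("CREATE TABLE invoices (id INTEGER PRIMARY KEY, owner TEXT)")
db.executemany("INSERT INTO invoices VALUES (?, ?)", [(1, "alice"), (2, "bob")])


def find_invoice_unsafe(conn: sqlite3.Connection, invoice_id: str) -> list:
    """The legacy way: the URL parameter becomes part of the SQL text."""
    return conn.execute(
        f"SELECT id, owner FROM invoices WHERE id = {invoice_id}"
    ).fetchall()


def find_invoice_safe(conn: sqlite3.Connection, invoice_id: str) -> list:
    """The fix: the parameter is bound as a value and never parsed as SQL."""
    return conn.execute(
        "SELECT id, owner FROM invoices WHERE id = ?", (invoice_id,)
    ).fetchall()


# What an attacker might put in "?id=..." instead of a number.
malicious = "1 OR 1=1"
leaked = find_invoice_unsafe(db, malicious)   # the condition is always true
blocked = find_invoice_safe(db, malicious)    # no invoice has this id
```

In PHP the same fix is a prepared statement with bound parameters, for example through PDO.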

My knowledge of security has grown significantly during this time. It hit me so much that later, for fun, I did "pentests" (it's easy when you have access to the code :)) of our other projects, upsetting my colleagues from other teams.

Continuous integration / Continuous delivery

As we work on this project in GitLab, you probably won't be surprised when I say that we used GitLab CI. It was a classic setup: we added a few steps that run after each commit, such as building and running the tests. Of course, most of the CI scripts were defined for the new part (which was still not doing anything at this point). After all, we were not going to run static analysis tools like PHPStan, PHP-CS-Fixer, PHPMD, etc. on the legacy code, because the build would never pass; the legacy PHP was a mess.

The Docker images ready for deployment were built on each pushed git tag. We used Rancher for the test/stage environments.

We also used Capistrano for RC and production deployment. No more FTP deployment. What has it given to us? The possibility of quick rollback, speed, and certainty of deployed changes.

What about session and users?

As "new" and "legacy" are two separate Docker containers, we had to find a way to pass information about the logged-in user to the "new" part.

The first solution was a separate memcached container with user sessions, shared between "new" and "legacy".

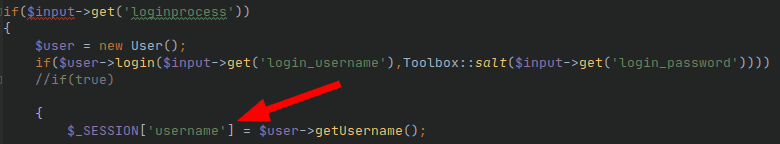

The legacy php part did not need to be changed:

The logged in user's username was already stored in the session (I am also puzzled by this comment "if true"). All we had to do was get to it in the new part.

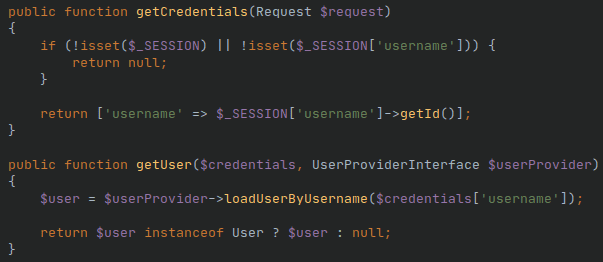

So we used Symfony Guard. It is a cool and easy-to-use authentication mechanism.

The new part, having access to the username of the logged in user, can thus simply authorize him.

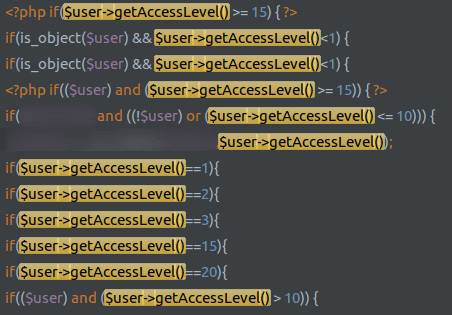

No, it wasn't that simple ;). In the old part, there was a complicated access control system based on numbers (sic!). Users had numbers in the database, and each number meant a different level of authorization. Of course, this wasn't documented anywhere, so we had to take the time to understand which number meant which permissions and recreate that in the new part with Symfony user roles.

Seriously, I'm not kidding. In the legacy application, it looked like this ("ctrl+f" results below):
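The translation itself ends up being a lookup table. The sketch below is in Python and every number and role name in it is invented; the real mapping had to be reverse-engineered from the legacy code. It shows the shape of the solution, not our actual values.

```python
from typing import Dict, List

# Hypothetical levels and roles, for illustration only.
LEVEL_TO_ROLES: Dict[int, List[str]] = {
    1: ["ROLE_USER"],
    5: ["ROLE_USER", "ROLE_MANAGER"],
    9: ["ROLE_USER", "ROLE_MANAGER", "ROLE_ADMIN"],
}


def roles_for_level(level: int) -> List[str]:
    """Translate a legacy numeric access level into named roles."""
    try:
        return list(LEVEL_TO_ROLES[level])
    except KeyError:
        # An unknown number must never silently grant access.
        raise ValueError(f"Unknown legacy access level: {level}") from None


manager_roles = roles_for_level(5)
```

Rejecting unknown numbers instead of guessing is the important part: in an undocumented system, an unexpected level is a finding to investigate, not a default to apply.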

First tickets

Of course, the first tasks were all about hotfixes. Before the client came to us, he did a little research and bounced off a few companies that did not want to work with legacy SaaS. So the project had been running without technical support for some time. When the client came to us, the queue of errors to be fixed was already long. Unfortunately, this kind of work is not the strangler pattern, it is just simple SaaS refactoring.

But at this point, we had the whole development setup ready to work with.

The problems were like with any legacy application. It was necessary to patch urgent bugs and optimize the code in some parts. We mainly used Blackfire and MySQL Slow Query Log to monitor and look for the causes of problems.

First new feature

But improving performance and reliability is not everything, because the competition never sleeps. Once we had fixed the most urgent bugs, we started working on new functionality in parallel with fixing the remaining bugs in the legacy code. It's finally time for what everyone likes. It's time to go wild on the greenfield, our new part.

There were a few things that limited us. The legacy had some layout. To make the whole system consistent, we had to recreate these styles and page structure in the new part. This had some consequences. For example, adding a new item to a menu had to be done in both parts. It became necessary to maintain two systems.

Okay, we had CSS and layout in the new part. We could start working. Could we? Yes! We decided to prefix the paths for the new part, so our reverse proxy started routing paths starting with "/app/" to the Symfony container.

The user logged in and entered the system dashboard, where a new item "Some new feature" appeared in the menu. So far, everything happened in the legacy. But when he clicked that new menu item, he was sent to "/app/some-new-feature". The reverse proxy recognized the "/app/" prefix and routed the request to the new part of the system. We have it! Strangler pattern in practice.
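The routing decision made by the facade is tiny, which is the whole point. In our setup it lived in the nginx configuration; the Python function below only models that decision so it can be read and tested in isolation. The upstream names and the "/dashboard.php" path are assumptions.

```python
from typing import Sequence

# Hypothetical upstream names for the two containers.
LEGACY = "legacy"
NEW = "new"


def choose_upstream(path: str, new_prefixes: Sequence[str]) -> str:
    """Send a request to the new application only if its path was taken over."""
    for prefix in new_prefixes:
        if path.startswith(prefix):
            return NEW
    # Everything that has not been migrated yet still belongs to legacy.
    return LEGACY


# Day one of the facade: nothing migrated, all traffic goes to legacy.
day_one = choose_upstream("/dashboard.php", [])

# After the first new feature: the "/app/" prefix belongs to the new part.
new_feature = choose_upstream("/app/some-new-feature", ["/app/"])
still_legacy = choose_upstream("/dashboard.php", ["/app/"])
```

Strangling the legacy then means growing the list of prefixes release by release, until nothing falls through to the last line.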

Getting rid of legacy

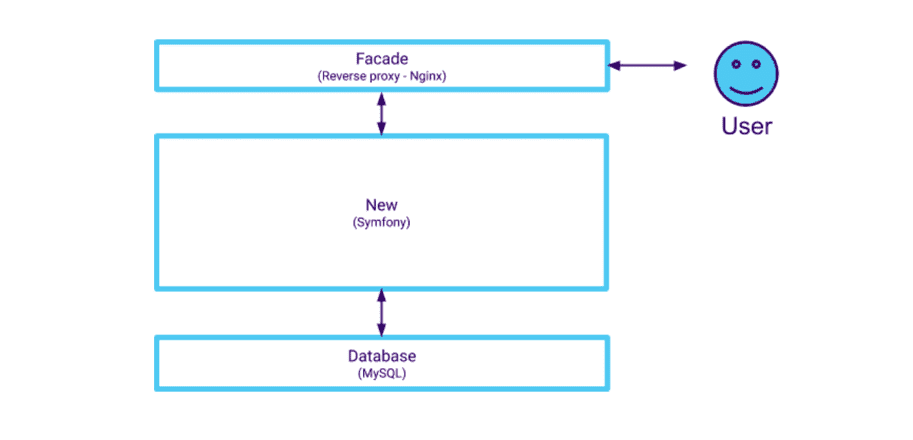

In each iteration, we tried to replace some legacy feature with a new one. With every release, we wanted to change the size proportions of the "new" and "legacy" boxes, until the "legacy" box finally disappeared and we were left with only "new".

For example, logging into the system was one of the first. Remember how it worked so far? The user logged in through the legacy, his username was saved in the session (memcached), and the new part read the username from the session, after which the user could be authorized in both. Now we had to reverse this mechanism. While we were at it, we decided to stop using the memcached session and replace it with a JWT token passed in a cookie. This was also necessary later to be able to scale the app in the cloud (sessionless authentication).
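Why does a signed token remove the need for a shared session store? Because any container that knows the secret can verify the cookie on its own. The sketch below builds and checks an HS256-signed JWT using only the Python standard library, to show the mechanism; it is not our implementation, the function names are invented, and a real project should use a maintained JWT library.

```python
import base64
import hashlib
import hmac
import json
from typing import Optional


def _b64url(data: bytes) -> str:
    return base64.urlsafe_b64encode(data).rstrip(b"=").decode("ascii")


def _b64url_decode(text: str) -> bytes:
    return base64.urlsafe_b64decode(text + "=" * (-len(text) % 4))


def _sign(signing_input: str, secret: bytes) -> str:
    digest = hmac.new(secret, signing_input.encode("utf-8"), hashlib.sha256).digest()
    return _b64url(digest)


def issue_token(username: str, secret: bytes, now: int, ttl: int = 3600) -> str:
    """Create the value that would be stored in the cookie after login."""
    header = _b64url(json.dumps({"alg": "HS256", "typ": "JWT"}).encode("utf-8"))
    payload = _b64url(json.dumps({"sub": username, "exp": now + ttl}).encode("utf-8"))
    signing_input = f"{header}.{payload}"
    return f"{signing_input}.{_sign(signing_input, secret)}"


def verify_token(token: str, secret: bytes, now: int) -> Optional[str]:
    """Return the username for a valid, unexpired token, otherwise None."""
    parts = token.split(".")
    if len(parts) != 3:
        return None
    header, payload, signature = parts
    expected = _sign(f"{header}.{payload}", secret)
    # The payload is decoded only after the signature has been checked.
    if not hmac.compare_digest(signature.encode("utf-8"), expected.encode("utf-8")):
        return None
    claims = json.loads(_b64url_decode(payload))
    if claims["exp"] <= now:
        return None
    return claims["sub"]


secret = b"demo-secret-read-from-an-environment-variable"
token = issue_token("alice", secret, now=1_000)
username = verify_token(token, secret, now=1_001)
```

No lookup in memcached or any other store is needed to answer "who is this?", which is what makes it possible to add more containers behind the proxy.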

For functionalities less crucial than logging in, we used feature flags and canary releases. We didn't use any fancy tools for the feature flags, just a simple bool in the database: feature enabled or not. Switching functionality from legacy to new sometimes involved migrating data from old legacy tables to the new database structure, so we did this for specific groups of users. After that, we switched the feature flag for those users. For users, this usually revealed a slightly refreshed layout of the old functionality. If everything was fine, we gradually migrated data for subsequent users until everyone was using the new feature, and we could remove the legacy code and the feature flag altogether.
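The order of operations in that process is worth spelling out: migrate a user's data first, flip the flag second. A minimal sketch in Python follows, with an in-memory set standing in for the bool column in the database; the class, the function names, and the "reports" feature are all hypothetical.

```python
from typing import Callable, Iterable, Set, Tuple


class FeatureFlags:
    """In-memory stand-in for a simple per-user bool stored in the database."""

    def __init__(self) -> None:
        self._enabled: Set[Tuple[str, int]] = set()

    def enable(self, feature: str, user_id: int) -> None:
        self._enabled.add((feature, user_id))

    def is_enabled(self, feature: str, user_id: int) -> bool:
        return (feature, user_id) in self._enabled


def migrate_and_switch(
    flags: FeatureFlags,
    feature: str,
    user_ids: Iterable[int],
    migrate_user_data: Callable[[int], None],
) -> None:
    """Canary step: move one group of users to the new implementation."""
    for user_id in user_ids:
        migrate_user_data(user_id)      # data goes to the new tables first
        flags.enable(feature, user_id)  # only then is the user switched over


def handler_for(flags: FeatureFlags, feature: str, user_id: int) -> str:
    """Decide which implementation serves this user."""
    return "new" if flags.is_enabled(feature, user_id) else "legacy"


flags = FeatureFlags()
migrated = []
migrate_and_switch(flags, "reports", [1, 2], migrated.append)
```

If the migration of one user fails, the exception stops the loop before that user's flag is enabled, so nobody is routed to new code without their data.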

Do you need to rewrite EVERY legacy functionality?

Of course not. But the client never wants to get rid of any functionality. So we used the user behavior tracking tools. And it was an enlightening experience, especially for the client. It turned out that some functionalities that the client considered to be one of the most important ones, were completely unused by users! Why waste energy and money on parts of the system that are not used by anyone?

By using the feature flags, we were gradually hiding some unused legacy functionalities from users. If nobody complained, we just removed them.

Scalability

Sometimes a product becomes popular. Traffic was increasing and we were opening up to new markets. We came to the point where we had to migrate to the cloud. We chose Google Cloud and Kubernetes. The fact that we had used Docker from the beginning was helpful. We had sessionless authentication, filesystem abstraction, and all infrastructure in Docker. We were cloud-ready, so it happened.

So here we are today.

This week I finally deleted the legacy directory and its docker container.

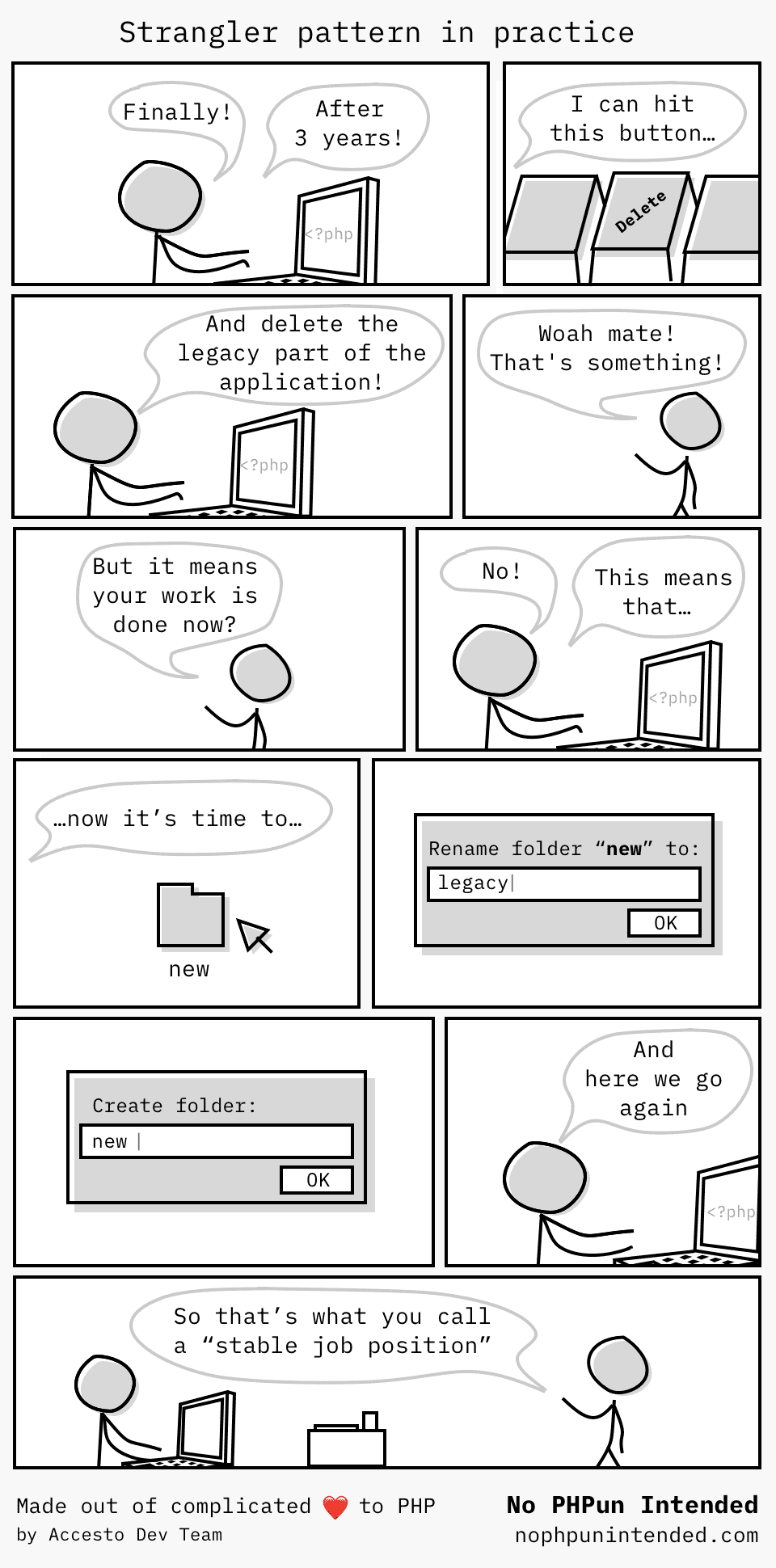

Seriously, it inspired me to write this article to commemorate the event. We are left with a product that works fully on our principles. The day has come when our "new" has become the "new legacy". I think we can wrap this up with the ideal No PHPun Intended comic about the Strangler Pattern:

How's your Legacy?

Legacy is not that bad if you get it right. If you are still not comfortable with your legacy, feel free to write to us, maybe we can help you. And if you are wondering whether the strangler pattern is a good approach for your project, check our CTO's article on the pros and cons of the strangler pattern approach!