Docker networks explained - part 2: docker-compose, microservices, chaos monkey

In my previous article on docker networks, I touched on the basics of network management using the docker CLI. In real life, though, you probably won't work this way: all the containers you need will most likely be orchestrated by a docker-compose file.

This is where this article comes in: let's see how to use networks in real life. It covers the basics of network management with docker-compose, how to use networks in multi-repository/multi-docker-compose projects and microservices, and how to use them to test failure scenarios such as limited network throughput, lost packets, etc.

Let's start with some very basic stuff. If you are already familiar with docker-compose, you might want to skip some of the sections below.

Docker-compose basics

Exposing ports with docker-compose

The first thing you might want to do is to simply expose a port:

```yaml
version: '3.6'

services:
  phpmyadmin:
    image: phpmyadmin
    ports:
      - "8080:80"
```

For those of you new to Docker: exposing a port means opening it to the outside world. You can restrict it to a specific host IP, but by default anyone who can reach your machine can access it. The port is opened on your host's network interfaces, not on the container's. In the above example, port 80 of the phpMyAdmin container is available on port 8080 of your machine (localhost:8080).
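In Compose terms, what this article calls exposing is done with the `ports` key. Compose also has a separate `expose` key, which is easy to confuse with it: `expose` only declares a port for other containers and does not open anything on the host. A minimal sketch contrasting the two, reusing the images from this article:

```yaml
version: '3.6'

services:
  phpmyadmin:
    image: phpmyadmin
    ports:
      - "8080:80"   # published: reachable on port 8080 of the host
  db:
    image: mariadb:10.3
    environment:
      MYSQL_ROOT_PASSWORD: secret
    expose:
      - "3306"      # not published: documents the port for other containers only
```

With this file, localhost:8080 answers, while nothing listens on port 3306 of the host.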

As you can see, it's pretty simple: you just list the ports to be exposed, following the same hostPort:containerPort convention as the docker CLI. You can also specify which host interface the port should listen on:

```yaml
version: '3.6'

services:
  phpmyadmin:
    image: phpmyadmin
    ports:
      - "127.0.0.1:8080:80"
```

Bound to 127.0.0.1, the port is reachable only from the host machine itself. This is handy when you do not want to expose some services to the outside.

Connecting containers within a docker-compose file

As mentioned in the docker networks post, docker-compose creates a default network. You can easily check this by creating a very simple docker-compose config file:

```yaml
version: '3.6'

services:
  db:
    image: mariadb:10.3
    environment:
      MYSQL_ROOT_PASSWORD: secret

  phpmyadmin:
    image: phpmyadmin
    restart: always
    ports:
      - "8080:80"
    environment:
      - PMA_HOSTS=db
```

Let's run it and see what happens:

```
$ docker-compose up -d
Creating network "myexampleproject_default" with the default driver
Pulling db (mariadb:10.3)...
(...)
```

As you might have noticed in the first line of the output, a default docker network called myexampleproject_default is created for this project (the project name defaults to the name of the directory, here myexampleproject). It is also visible in the docker CLI:

```
$ docker network ls
NETWORK ID     NAME                       DRIVER    SCOPE
a9979ee462fb   bridge                     bridge    local
d8b7eab3d297   myexampleproject_default   bridge    local
17c76d995120   host                       host      local
8224bb92dd9b   none                       null      local
```

All containers from this docker-compose.yaml are connected to this network. This means they can easily talk to each other, and their hostnames are resolved by service name:

```
$ docker-compose exec phpmyadmin bash
root@b362dbe238ac:/var/www/html# getent hosts db
172.18.0.3 db
```

As you should remember from the previous post on docker networks, containers connected to the same network can reach each other.

Docker-compose network and microservice-oriented architecture

When writing a microservice-oriented project, it's very handy to be able to simulate at least a part of the production approach in the development environment. This includes separating and connecting groups of containers but also testing network latency issues, connectivity problems etc.

Dividing containers into custom networks

But what if you DO NOT want the containers to be able to talk to each other? Maybe you are writing a system where one part should be hidden from the other one. In practice, such parts of the system are separated using AWS VPC or similar mechanisms, but it would be nice to test this on a development machine, right?

No problem, let's take a look at this config file:

```yaml
version: '3.6'

services:
  service1-db:
    image: mariadb:10.3
    environment:
      MYSQL_ROOT_PASSWORD: secret

  service1-web:
    image: nginxdemos/hello
    ports:
      - "80:80"

  service2-db:
    image: mariadb:10.3
    environment:
      MYSQL_ROOT_PASSWORD: secret

  service2-web:
    image: nginxdemos/hello
    ports:
      - "81:80"
```

As you can see, we have two separate services, each consisting of a web application and a db container. We would like each database to be accessible only from its own web service, so that, for example, service2-web cannot access service1-db directly.

Let's check how it works now:

```
$ docker-compose exec service1-web ash
/ # getent hosts service1-db
172.19.0.2 service1-db service1-db
/ # getent hosts service2-db
172.19.0.5 service2-db service2-db
```

Unfortunately, the services are not separated in the way we would like them to be.

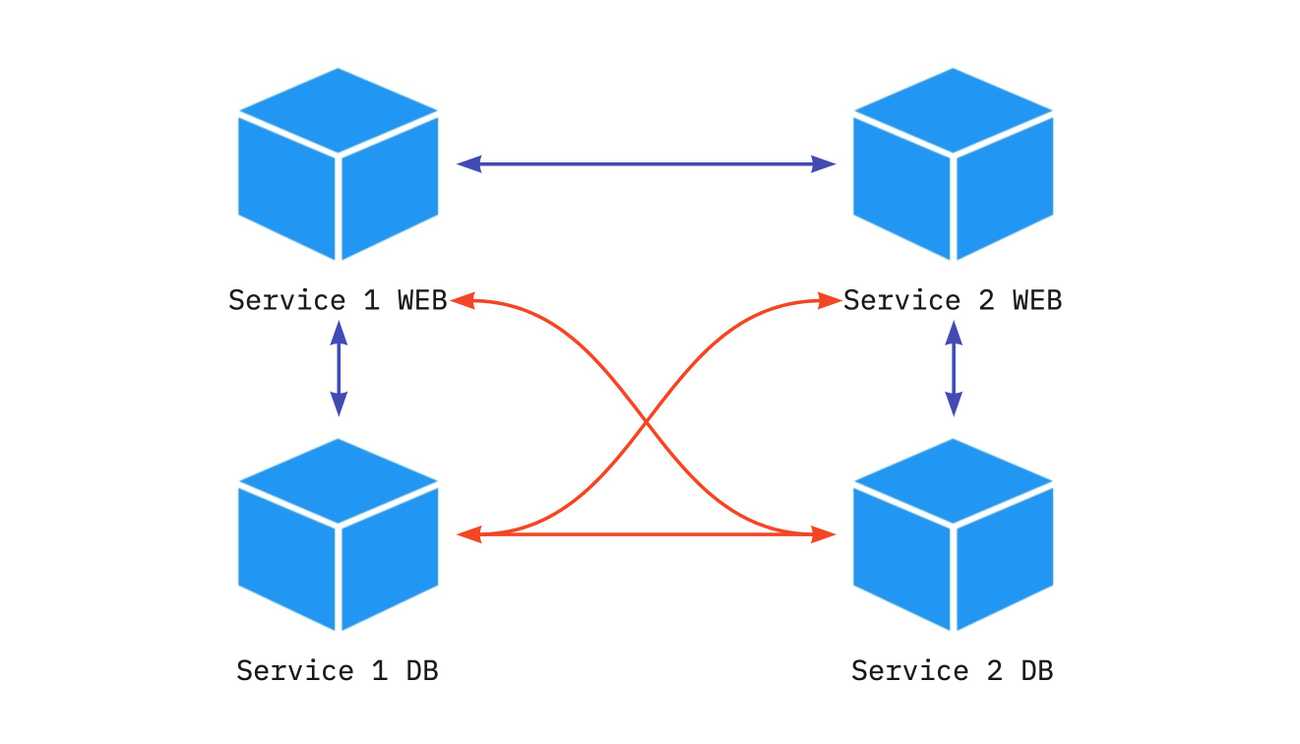

What we would like to achieve here is to drop the connections marked in red in the image and keep only the black ones. In other words, each service (its web and db containers) should have its own network.

No worries, this can be achieved by adding very simple changes:


version: '3.6'

services:

service1-db:

image: mariadb:10.3

environment:

MYSQL_ROOT_PASSWORD: secret

networks:

- service1

service1-web:

image: nginxdemos/hello

ports:

- 80:80

networks:

- service1

- web

service2-db:

image: mariadb:10.3

environment:

MYSQL_ROOT_PASSWORD: secret

networks:

- service2

service2-web:

image: nginxdemos/hello

ports:

- 81:80

networks:

- service2

- web

networks:

service1:

service2:

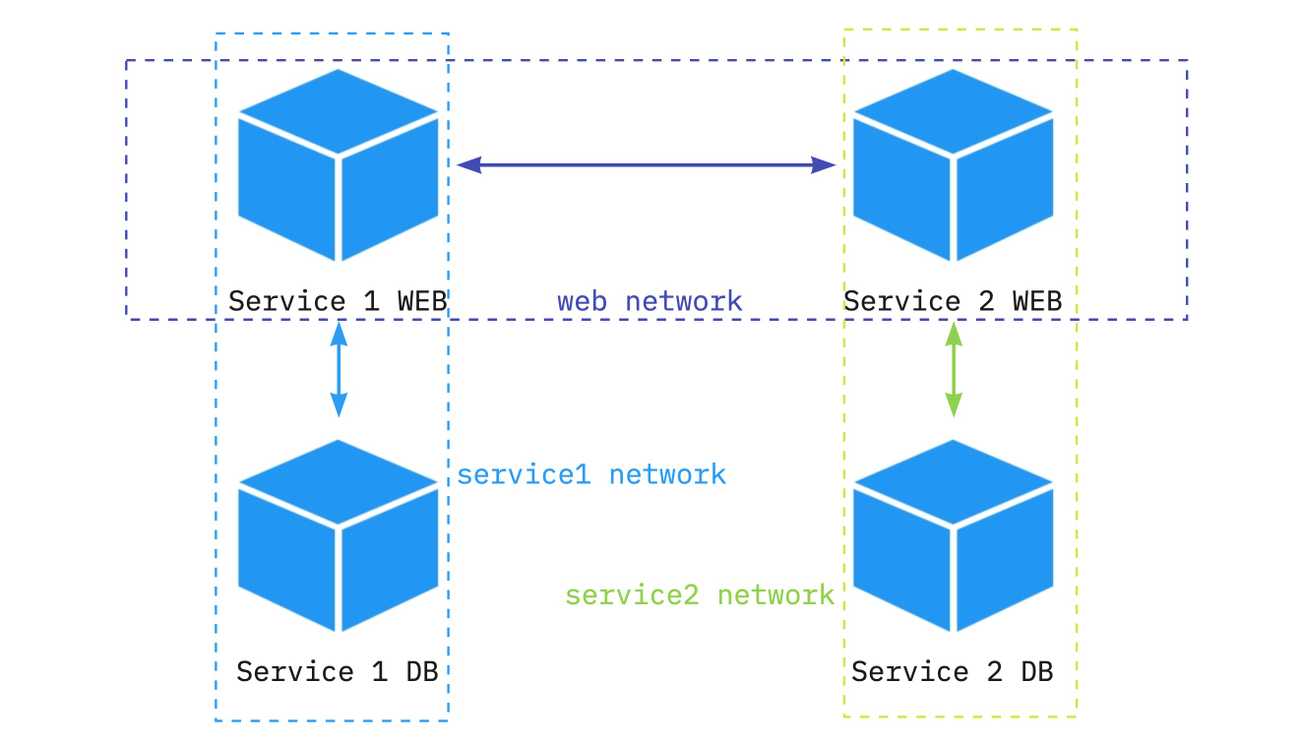

web:We have introduced three different networks (lines 30-31) - one for each service and a shared one for web services. Why do we need the third one? It is required in order to allow communication between service1-web and service2-web. We also added network configuration for each of the services (lines 7-8, 13-15, 20-21, 26-28). Right now, web services are in two custom networks, and db services are only in one custom network.

Let's check how service1-web resolves the names now:

```
$ docker-compose exec service1-web ash
/ # getent hosts service2-web
172.22.0.2 service2-web service2-web
/ # getent hosts service2-db
/ # getent hosts service1-db
172.20.0.3 service1-db service1-db
```

As you can see, service2-db can no longer be resolved from service1-web, while service2-web still can. We can quite easily separate containers by introducing networks and connecting only selected containers to each other.

Keep in mind that a container has a different IP address in each network it is connected to.

PS. The default network is still available if you want to use it. I prefer to create separate ones, so that each network's name clearly states what it is for.
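To use the default network alongside custom ones, a service has to list it explicitly: once a service has its own `networks` entry, Compose no longer attaches it to `default` automatically. A minimal sketch, assuming the service1/web setup from above:

```yaml
version: '3.6'

services:
  service1-web:
    image: nginxdemos/hello
    networks:
      - service1
      - web
      - default   # the implicit <project>_default network; needs no top-level entry

networks:
  service1:
  web:
```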

Connecting containers between multiple docker-compose files

Quite often, projects like the one above are split between git repositories, or at least between several docker-compose.yaml files, so that a developer can launch each service separately. How can we connect such services? Let's take a look.

Let's assume we decided to split the previously used project into two separate repositories. One for service1, and a second one for service2. This would mean we have two docker-compose.yaml files:

```yaml
# service1/docker-compose.yaml
version: '3.6'

services:
  service1-db:
    image: mariadb:10.3
    environment:
      MYSQL_ROOT_PASSWORD: secret

  service1-web:
    image: nginxdemos/hello
    ports:
      - "80:80"
```

```yaml
# service2/docker-compose.yaml
version: '3.6'

services:
  service2-db:
    image: mariadb:10.3
    environment:
      MYSQL_ROOT_PASSWORD: secret

  service2-web:
    image: nginxdemos/hello
    ports:
      - "81:80"
```

If we run docker compose on both configurations, service1-web and service2-web won't be able to communicate with each other, as they will be added to two different networks: each docker-compose.yaml file creates its own network by default.

```
$ docker-compose up -d
Creating network "service1_default" with the default driver
Creating service1_service1-web_1 ... done
Creating service1_service1-db_1 ... done
```

Let's start by adding the network configuration back to service1, with one small change:

```yaml
version: '3.6'

services:
  service1-db:
    image: mariadb:10.3
    environment:
      MYSQL_ROOT_PASSWORD: secret
    networks:
      - service1

  service1-web:
    image: nginxdemos/hello
    ports:
      - "80:80"
    networks:
      - service1
      - web

networks:
  service1:
  web:
    name: shared-web
```

The only new piece is the `name: shared-web` entry under the `web` network: I wanted to give the web network a fixed name. By default, a network's name is PROJECTNAME_NETWORKNAME, and the project name defaults to the name of the directory containing the docker-compose file. That directory might be named differently on different developers' machines, so the safe option is to set the name explicitly.

Now, for service2, we need to act a bit differently:

```yaml
version: '3.6'

services:
  service2-db:
    image: mariadb:10.3
    environment:
      MYSQL_ROOT_PASSWORD: secret
    networks:
      - service2

  service2-web:
    image: nginxdemos/hello
    ports:
      - "81:80"
    networks:
      - service2
      - web

networks:
  service2:
  web:
    external: true   # must already exist (created by service1's compose file)
    name: shared-web
```

As you can see in the `web` network definition, this time we declare the network as external. This means docker-compose won't try to create it and will fail if it does not exist, so service1 has to be started first (or the network created manually with `docker network create shared-web`). If a network with the given name exists, docker-compose reuses it for its services.

That's it. The web containers of service1 and service2 can reach each other, while their databases stay separated, and each service can be developed in its own repository.

As an extension to the above, you may want to look at network aliases, which give a container extra hostnames on a given network, or at internal networks, which isolate services even further by cutting them off from the outside world.
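A minimal sketch of both options applied to service1, assuming the same service and network names as above; the alias `database` is a hypothetical name chosen for illustration:

```yaml
version: '3.6'

services:
  service1-db:
    image: mariadb:10.3
    environment:
      MYSQL_ROOT_PASSWORD: secret
    networks:
      service1:
        aliases:
          - database   # hypothetical extra hostname, resolvable only on the service1 network

  service1-web:
    image: nginxdemos/hello
    ports:
      - "80:80"
    networks:
      - service1
      - web

networks:
  service1:
    internal: true   # containers on this network get no route to the outside world
  web:
```

With this setup, service1-web can reach the database as either `service1-db` or `database`. service1-db, being attached only to the internal network, cannot open connections to the outside, while service1-web stays reachable through the non-internal `web` network.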

Chaos testing

As you know, when it comes to outages, the question is not if one will happen, but when. It's always better to prepare for such scenarios and test how the system behaves when things go wrong. What happens if some packets are dropped, or latency goes up? What if a service goes offline?

Chaos testing is all about getting prepared for this.

I highly recommend looking at Pumba, a chaos testing tool for Docker that lets you pause and kill containers, and also emulate network delay, packet loss, corruption, etc.

A full description of Pumba is beyond the scope of this article, so let's just look at a very simple network delay simulation.

Let's spin up a container that pings 8.8.8.8:

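```shell
docker run -it --rm --name demo-ping alpine ping 8.8.8.8
```

Now, while watching its output, run the following command in a separate terminal tab:

```shell
pumba netem --duration 5s --tc-image gaiadocker/iproute2 delay --time 3000 demo-ping
```

This tells Pumba to delay the network traffic of the demo-ping container by 3000 ms for 5 seconds, running the `tc` command from a helper container based on the gaiadocker/iproute2 image. While it runs, the ping times in the first tab should jump by roughly 3 seconds and then return to normal. And that's it!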

You can also implement other chaos tests within minutes.
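The same kind of experiment can also live in a compose file, so the whole team can rerun it. A minimal sketch, assuming the `gaiaadm/pumba` image from Docker Hub runs the pumba binary as its entrypoint and is given access to the Docker socket; it swaps the delay for packet loss via `netem loss --percent`, so check `pumba netem loss --help` for the flags your version supports:

```yaml
version: '3.6'

services:
  demo-ping:
    image: alpine
    container_name: demo-ping   # fixed name so Pumba can target it
    command: ping 8.8.8.8

  pumba:
    image: gaiaadm/pumba        # assumption: entrypoint is the pumba binary
    volumes:
      # Pumba controls other containers through the Docker daemon socket
      - /var/run/docker.sock:/var/run/docker.sock
    # drop about 20% of the packets leaving demo-ping, for one minute
    command: netem --duration 1m --tc-image gaiadocker/iproute2 loss --percent 20 demo-ping
    depends_on:
      - demo-ping
```

Stop the earlier `docker run` container first, since both use the name demo-ping. Then start this file with `docker-compose up -d` and follow the pings with `docker-compose logs -f demo-ping`: for about a minute, roughly one in five requests should go unanswered.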

Summary

Docker and docker-compose are great tools for emulating different network configurations without setting up servers or virtual machines. The commands and configs are simple to use, and combined with external tools like Pumba, they also let you test problematic situations and prepare for outages.

Update: I've published a new article describing reverse proxy server set-up with TLS termination and load balancing

If you are interested in Docker, check out my e-book: Docker deep dive