Docker - Basic Concepts

If you are not familiar with containerization, then here are the most common benefits that make it worth digging deeper into this concept:

- Docker allows you to build an application once and then run it in all your environments, regardless of the differences between them.

- Docker helps you to solve dependency and incompatibility issues.

- Docker gives you isolation similar to a virtual machine, but without most of the overhead.

- Docker environments can be fully automated.

- Docker is easy to deploy.

- Docker allows for separation of duties.

- Docker allows you to scale easily.

- Docker has a huge community.

Let's start with a quote from the Docker page:

Docker containers wrap up a piece of software in a complete filesystem that contains everything it needs to run: code, runtime, system tools, system libraries – anything you can install on a server. This guarantees that it will always run the same, regardless of the environment it is running in.

We also have a whole dedicated article with arguments for and against the usage of Docker.

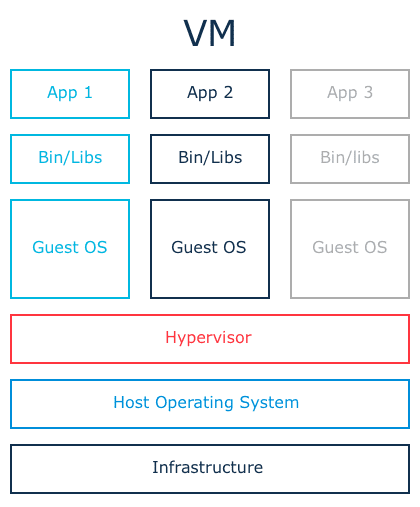

This might sound familiar: virtualization lets you achieve pretty much the same goals. In contrast to virtualization, however, Docker runs all processes directly on the host operating system, which avoids the overhead of a virtual machine in both performance and maintenance. Docker achieves this using isolation features of the Linux kernel such as cgroups and kernel namespaces. Each container has its own process space and filesystem, and can be given its own memory limits. You can run all kinds of Linux distributions inside a container, although they all share the host's kernel. What makes Docker really useful is the community and all the projects that complement the main functionality. There are multiple tools to automate common tasks and to orchestrate and scale containerized systems. Docker is also heavily supported by many companies, Amazon, Google, and Microsoft among them. Docker can also run Windows inside containers (only on Windows hosts).
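To make the kernel namespaces mentioned above a little more concrete, here is a minimal Python sketch that lists the namespaces the current process belongs to by reading the symlinks under `/proc/self/ns`. It assumes a Linux host and returns an empty result elsewhere. The function name `current_namespaces` is made up for this illustration and is not part of Docker.

```python
import os

# Linux-only: each entry is a symlink whose target looks like "pid:[4026531836]".
NS_DIR = "/proc/self/ns"


def current_namespaces(ns_dir=NS_DIR):
    """Return {namespace_name: identifier} for this process, or {} if unavailable."""
    try:
        names = sorted(os.listdir(ns_dir))
    except OSError:
        # Not Linux, or /proc is not mounted or not readable.
        return {}
    result = {}
    for name in names:
        try:
            result[name] = os.readlink(os.path.join(ns_dir, name))
        except OSError:
            # Skip entries we are not allowed to read.
            continue
    return result


if __name__ == "__main__":
    for name, ident in current_namespaces().items():
        print(f"{name:20} {ident}")
```

If you run this once on the host and once inside a container, the identifiers for namespaces such as `pid`, `mnt`, and `net` should normally differ. That difference is the isolation boundary.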

DOCKER BASICS

Before we dig into using Docker for a microservices architecture, let's go over the top-level details of how it works.

- Image - holds the filesystem and parameters needed to run an application. It does not have any state and it does not change. You can think of an image as a template used to run containers.

- Container - this is a running instance of an image. You can run multiple instances of the same image. It has a state and can change.

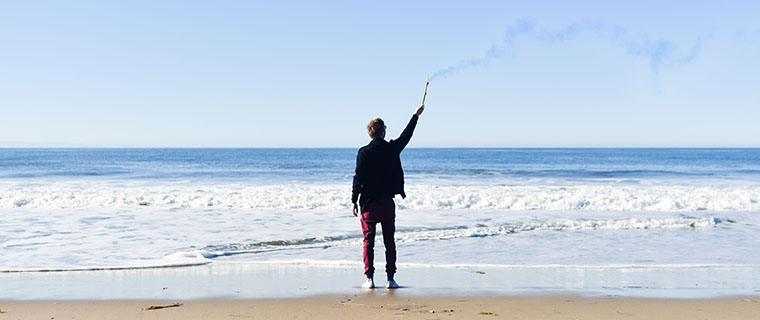

- Image layer - each image is built out of layers. Images are usually built by running commands or adding/modifying files (using a Dockerfile). Each step that is run in order to build an image produces an image layer. Docker saves each layer, so the next time you run a build it can reuse the layers that did not change. Layers are shared between all images, so if two images start with the same steps, those layers are shared between them. You can see this illustrated below:

As you can see, all compared images share common layers. So if you download one of them, the shared layers will not be downloaded and stored again when downloading a different image. In fact, changes in a running container are also seen as an additional, uncommitted layer.

- Registry - a place where images and image layers are kept. You can build an image on your CI server, push it to a registry and then use the image from all of your nodes without the need to build the images again.

- Orchestration (docker-compose) - a system is usually built from several containers, because each container should have only one concern. Orchestration makes it much easier to run a multi-container application, and docker-compose is the most commonly used tool for that. It can run multiple containers that are connected by networks and share volumes.
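The relationship between images, layers, and containers described in the list above can be sketched as a small Python model. This is a deliberate simplification, not Docker's actual implementation: a layer is identified here by hashing its parent's ID together with the build step, whereas real Docker also takes file contents into account. The build steps and the names `build_image`, `layers_to_download`, and `Container` are hypothetical.

```python
import hashlib


def layer_id(parent_id, instruction):
    """Identify a layer by its parent layer plus the build step (simplified)."""
    return hashlib.sha256(f"{parent_id}\n{instruction}".encode()).hexdigest()[:12]


def build_image(instructions):
    """Return the ordered, immutable tuple of layer IDs for a list of build steps."""
    layers, parent = [], ""
    for step in instructions:
        parent = layer_id(parent, step)
        layers.append(parent)
    return tuple(layers)


def layers_to_download(image, local_store):
    """Layers of `image` that are not yet present in the local store."""
    return [layer for layer in image if layer not in local_store]


class Container:
    """A running instance: shared read-only image plus its own writable layer."""

    def __init__(self, image):
        self.image = image   # never modified, shared by all containers
        self.changes = {}    # uncommitted changes, private to this container


# Hypothetical build steps: both images start with the same two steps.
api = build_image(["FROM debian", "RUN install-runtime", "COPY api /app"])
worker = build_image(["FROM debian", "RUN install-runtime", "COPY worker /app"])

local_store = set(api)  # pretend the api image was already pulled
missing = layers_to_download(worker, local_store)
print(f"worker needs {len(missing)} of {len(worker)} layers")

first, second = Container(api), Container(api)
first.changes["/tmp/state"] = "only visible in the first container"
```

In this model, pulling `worker` after `api` only fetches the final layer, and writing a file in one container leaves both the image and the other container untouched.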

VM VS. CONTAINER

As mentioned earlier, Docker might seem similar to virtual machines, but it works in an entirely different way. Virtual machines work exactly as the name suggests: by creating a virtualized machine for the guest system to use. The main part is a hypervisor that runs on the host system and grants the guest systems access to all kinds of resources. On top of the hypervisor, a guest OS runs on each virtual machine, and your application uses this guest OS.

What Docker does differently is use the host system directly, with no need for a hypervisor or a guest OS. It runs containers using several features of the Linux kernel that securely separate the processes inside them. Thanks to this, a process inside a container cannot influence processes outside of it. This approach makes Docker more lightweight in terms of CPU and memory usage as well as disk space.
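Because a containerized process is an ordinary process on the host kernel, the kernel's bookkeeping for it is visible through `/proc`. As a rough illustration, the Python sketch below parses `/proc/self/cgroup`, whose lines have the form `hierarchy-ID:controller-list:cgroup-path`. It assumes a Linux host. The function names are made up for this example, and the sample paths in the usage lines are hypothetical.

```python
def parse_cgroup_line(line):
    """Split one /proc/<pid>/cgroup line into its three colon-separated fields."""
    hierarchy, controllers, path = line.strip().split(":", 2)
    return {
        "hierarchy": int(hierarchy),
        # The controller list is empty on cgroup v2 (lines look like "0::/path").
        "controllers": controllers.split(",") if controllers else [],
        "path": path,
    }


def read_cgroups(proc_file="/proc/self/cgroup"):
    """Return the parsed cgroup entries for this process, or [] if unavailable."""
    try:
        with open(proc_file) as fh:
            return [parse_cgroup_line(line) for line in fh if line.strip()]
    except OSError:
        # Not Linux, or /proc is not mounted.
        return []


if __name__ == "__main__":
    # Hypothetical sample lines, one in cgroup v2 style and one in v1 style.
    print(parse_cgroup_line("0::/docker/abc123"))
    print(parse_cgroup_line("4:cpu,cpuacct:/docker/abc123"))
    for entry in read_cgroups():
        print(entry)
```

The cgroup path is what resource limits attach to, so there is no separate guest kernel doing the accounting.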
